The PM's Guide to RICE Prioritization

As product managers, we have limited resources. We can’t pursue every initiative at once. Therefore, a key part of the product manager’s job is to prioritize.

Too often, product managers try to answer the question “is this initiative a good initiative or a bad initiative?” - but that’s not the right question to answer.

Rather, the right question to answer is “which sequence of initiatives will unlock maximum value for customers, users, and the business?”

In other words, product management isn’t just about identifying which bets to make. Product managers must select the right sequence of bets that will drive the highest return on investment (ROI).

The art and science of prioritization enables us as product managers to make consistent, defensible calls on which initiative to ship next.

Therefore, in this essay, we’re going to cover the RICE prioritization framework. We’ll discuss what RICE is (as well as who first proposed this framework), how to use it, how not to use it, and how to customize it to your organization’s specific needs. As you leverage the RICE prioritization framework, you’ll increase the value of the initiatives that you ship.

But before we jump into the details of RICE, let’s take a step back and clarify the value that prioritization provides.

Why prioritization matters for product managers

The end outcome of prioritization is a stack-ordered sequence. That is, the goal is to get to an ordered list that has no ties. As product managers, we should never have multiple “first priorities,” because having more than a single priority at a time means that nothing is truly important.

This ranked list of initiatives is incredibly valuable for product managers because it eliminates mental overhead. Once we have a ranked list, we can focus design and engineering efforts on the highest-impact work without being distracted by less important requests.

Furthermore, this prioritized list also helps to align stakeholders and customers towards “what are we doing next.” When we maintain a prioritized list of bets, we bring stakeholders and customers along the product discovery journey.

We’re better positioned to explain shifts in priorities over time, based on new learnings that we discover about the market, about customer pains, and about our technology. We can discuss the inputs that went into the sequence of initiatives, and when we offer up transparency as product managers, we help customers and stakeholders feel heard and accounted for.

When everyone is aligned on the same method of prioritization, we observe reduced friction and fewer instances of conflict. That then enables us as a product team to focus more time on execution and strategy, as we spend less time needing to negotiate in tense situations that were avoidable and preventable.

We now know why prioritization is immensely impactful for product managers. But, why don’t we use it more?

It’s because prioritization is hard. Let’s break down why that’s the case.

Why prioritization is hard

Prioritization is a difficult task because there are so many potential factors at play that you could use to prioritize.

Since product managers stand at the intersection of customers, makers (i.e. designers and engineers), and the business, we have a nearly infinite set of factors to consider in deciding what to ship next.

Some examples of factors we might need to balance as PMs:

Customers: customer acquisition, customer retention, testimonials and customer sentiment, virality, customer lifetime value, customer alternatives

Makers: innovation, scalability, reliability, abstraction, speed, effort, quality

Business: revenues, profits, costs, payback time, competitive landscape, partnerships

Even this abbreviated list of factors is overwhelming for human minds to comprehensively address. How might we make prioritization less difficult, then?

I’ve seen some people use prioritization checklists, but I can tell you firsthand that checklists won’t help with prioritization.

The problem with checklists is that their end output is a binary result of “good investment” or “not a good investment.” But, prioritization is about sequencing, not about “good or bad”.

In fact, many frameworks taught in business schools are essentially checklists - they will help you get an answer of “good” or “bad”, but they won’t resolve the issue of prioritization.

Here’s a non-exhaustive set of frameworks I’ve seen people attempt to use to prioritize their products: SWOT analysis, 4 P’s analysis, PEST analysis, growth/share matrix, Ansoff matrix, GE / McKinsey matrix, 3C’s analysis

Take it from me: as an ex-management consultant, I tried using these frameworks early on in my product management career to drive prioritization. But, they inevitably failed me, because none of them drive a stack-ranked sequence of work.

Well, what if we used a single-criterion lens to assess our initiatives? Wouldn’t a simpler view help us get to a strictly-ordered sequence? Unfortunately, views that are too simplistic can also cause issues.

Some organizations use the principle of “ship the smallest wins”, where they only look at “projected effort” and ship the lowest-effort initiatives first.

But, the problem with this approach is that many high-value product initiatives (i.e. those that unlock multiplicative value) require sustained effort, and therefore these crucial initiatives never get shipped.

On the other end of the spectrum, some organizations use the principle of “ship the features that unlock the most immediate revenue.”

But, this approach is also problematic. It leads to chasing monetization rather than investing in strategic bets, and this investment portfolio becomes linear, fractured, and project-oriented rather than multiplicative, synergistic, and product-oriented.

Yet other organizations use criteria such as “which initiative uses the most advanced technology?” (e.g. blockchain, crypto, AI/ML, etc.)

But, advanced technology itself is not the real value driver. If you could use less-advanced technology to ship the same amount of business value in 10x less time, then betting on advanced technology is likely not the right call.

Ultimately, prioritization is difficult due to two root problems:

Prioritization relies on qualitative information that can’t be easily quantified

Many initiatives are “apples-to-oranges” comparisons, which makes it difficult to reason about tradeoffs

Therefore, if we can find a way to quantify qualitative info and use a consistent model, that will help us complete the ultimate goal of prioritization: getting to a strict rank order.

At this point, many people will conclude that the best way to prioritize is to use a highly-sophisticated model that takes in dozens of inputs. They’ll advocate for team-specific prioritization models that yield dollar values as an end result.

That’s not the right call. Here’s why.

The value of simplifying and standardizing prioritization efforts

Standardization on a consistent prioritization model is crucial to organizations. You need to use the same model across your organization so that stakeholders from all departments and business lines can fully understand the levers that went into prioritization.

When prioritization models are customized team-by-team, it causes a loss of alignment and a loss of context for non-product teams. When non-product teams lose faith in their product teams, organizations lose significant velocity due to internal battles.

When product teams don’t speak the same language with non-product teams, customers and stakeholders will not be aligned with your decision-making process. That then causes them to accuse you of being inconsistent or unfair.

And, the time that you spend trying to onboard them onto your specific flavor of prioritization (and discuss how your prioritization is different from your sister teams’ prioritization models) will only serve to irritate them more, rather than provide them with a satisfying conclusion.

So, prioritization models must be consistent across the entire product org. That is, each product team can have a distinct charter or problem area to work on, but they should not use different methods of initiative prioritization. We shouldn’t let each product team come up with exotic team-specific inputs, as that will drive disunity and confusion across the organization.

Furthermore, prioritization models need to be quick and straightforward. They shouldn’t take weeks of dedicated work to complete.

Here’s why: prioritization has steeply diminishing marginal returns.

The top 3 initiatives that you fund as a product manager are more important than all other initiatives combined. Similarly, the sequence of these top 3 initiatives is more important than the sequence of all other initiatives.

That’s because everything outside of “the top 3 initiatives” likely won’t be funded within the next quarter.

Too frequently, product teams go off on a fact finding mission to get the exact dollar value of benefits and the exact dollar estimate of costs. But that’s not actually helpful, because all you need is for the sequence to be right. In other words, it’s more important to ship high impact bets in sequence than it is to get the absolute exact calculation for any given bet.

Let’s talk about the quantifiable loss that happens when product teams try to get their prioritization models to be “exactly right” with highly accurate inputs.

Imagine a 5 PM product team that runs quarterly planning (i.e. 4 times per year). They use a highly-sophisticated revenue attribution model that enables them to forecast revenues, costs, and profitability for the next 5 years across a variety of assumptions and scenarios.

Imagine that this estimation exercise takes 3 weeks of full-time effort per product manager as they go on fact-finding missions. 3 weeks per quarter X 4 quarters per year X 5 PMs = 60 product manager weeks per year are allocated towards fact-finding. We’ve essentially spent more than one full-time employee’s worth of time to run this exercise.

Now let’s imagine that we used a highly simplified model to just get to a ranking. This model doesn’t use a specific estimated number of dev-months or granular revenue forecasts, and each initiative takes a maximum of 30 minutes to assess.

In this alternative model, each PM should be able to quickly sort their initiatives within a single day rather than needing 3 weeks. That comes out to only 1 day per quarter X 4 quarters per year X 5 PMs = 20 product manager days per year. Compared with 60 product manager weeks (roughly 300 working days), that’s 15x more efficient than the previous method.
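The arithmetic behind this comparison can be checked in a few lines. This is a sketch using the figures from the example above; the 5-working-days-per-week conversion is our assumption, since the scenario doesn’t state it.

```python
# Figures from the example above; WORKING_DAYS_PER_WEEK is an assumption.
PMS = 5
QUARTERS_PER_YEAR = 4
WORKING_DAYS_PER_WEEK = 5  # assumed conversion from weeks to days

detailed_weeks = 3 * QUARTERS_PER_YEAR * PMS   # 3 weeks per PM per quarter
detailed_days = detailed_weeks * WORKING_DAYS_PER_WEEK
simple_days = 1 * QUARTERS_PER_YEAR * PMS      # 1 day per PM per quarter

print(detailed_weeks)               # 60 PM-weeks per year
print(simple_days)                  # 20 PM-days per year
print(detailed_days / simple_days)  # 15.0
```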

Remember: the Pareto principle suggests that we can get 80% of the value of prioritization by using only 20% of the effort. As long as you can consistently get the most crucial initiatives to bubble to the top, you no longer need to flesh out the business case for less-crucial initiatives.

After you’ve locked in the top-priority initiatives, you can then launch deep fact-finding missions and build specific revenue projections for your top three initiatives, so that you can establish the business case for your leadership team to fund your highest-impact bets.

In this approach, we get granularity only for the initiatives that we’re tackling next, rather than for all possible initiatives - many of which never make “the cut line” and therefore are never worked on.

So, summarizing what matters in prioritization:

We need to quantify qualitative information

We need a consistent set of parameters for assessing initiatives

We need to use the same prioritization model across the product org

We need to use the simplest model possible to maximize the efficacy of our time

So, now that we’re aligned on the cornerstones of prioritization, let’s talk about the RICE prioritization framework.

The RICE prioritization framework

RICE was first proposed by Sean McBride at Intercom as a way to identify and quantify the most important inputs for prioritization. RICE stands for the four key components that are used to assess value:

Reach: how many users are ultimately affected by a bet

Impact: magnitude of improvement for users from this bet

Confidence: amount of evidence backing up your hypothesis

Effort: design and engineering work required to bring this bet to life

Using McBride’s original methodology, you calculate a RICE score using this formula:

R x I x C / E

Then, you rank your initiatives from highest RICE score to lowest RICE score.

Essentially, RICE is a more granular version of the standard ROI calculation, which is simply “benefits divided by costs.” The power of breaking up “benefits” into the three subfactors of reach, impact, and confidence is that we have a more reasoned way to compare different kinds of benefits.
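As a rough illustration of the formula, here is a minimal Python sketch. The initiative names and input numbers are made up for the example, and the function name `rice_score` is our own rather than anything from McBride’s write-up.

```python
def rice_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    """Return (reach * impact * confidence) / effort."""
    if effort <= 0:
        raise ValueError("effort must be positive")
    return reach * impact * confidence / effort

# Hypothetical initiatives: name -> (reach, impact, confidence, effort)
initiatives = {
    "Bulk export": (2000, 1, 0.8, 2),
    "Onboarding revamp": (500, 3, 0.5, 4),
    "SSO login": (800, 2, 1.0, 1),
}

# Rank from highest RICE score to lowest.
ranked = sorted(initiatives, key=lambda name: rice_score(*initiatives[name]), reverse=True)
for name in ranked:
    print(name, rice_score(*initiatives[name]))
```

With these made-up inputs, “SSO login” ranks first, followed by “Bulk export” and then “Onboarding revamp.”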

While McBride’s original model is quite valuable, it uses some highly-granular numbers that take too long to gather for the purposes of high-level prioritization. That is, McBride proposes that we gather specific numbers on “how many users will be impacted by this initiative” and “how many dev months will it take to ship”, but collecting these details sacrifices too much time and doesn’t meaningfully change the sequencing of initiatives.

Therefore, we can make the RICE model even more powerful than originally proposed, by simplifying it even further.

Simplifying the RICE model

Our objective is to use the most simplified version possible for each of the components of RICE, so that we can maximize the signal we get for the time we invest. That is, we should be able to quickly get a clear answer on whether a particular initiative is one of the top three bets to ship. Only those top bets then warrant deeper analysis to establish the business case.

For each of the four RICE components, we’ll use broad bucketing to get to a kind of T-shirt sizing (e.g. small, medium, large) that helps us quickly trade away non-impactful bets.

Reach

When we think about the reach of a given initiative, we want to evaluate its ultimate audience. That is, while you might use A/B testing for its initial implementation, you want to consider how large of a swath of your audience it will touch once you’ve rolled it out.

The three classifications we can use here, as well as their associated scores:

Everyone in your current product (4 points)

Some of the users in your current product (2 points)

New users who aren’t in your product right now (1 point)

For transparency, here are some of the simplifications we made.

Simplification #1: we didn’t consider whether users would use this functionality multiple times, or only once per user.

Simplification #2: we didn’t decide on a specific time interval, e.g. “number of users touched per quarter.”

Simplification #3: we didn’t differentiate between different kinds of users and their associated segment sizes. For example, for B2B products, “administrative users” typically make up only 1-5% of the total user base, so a feature that targets admin users would still be considered as “some of the users in your current product.”

Simplification #4: we didn’t evaluate the different sizes of customer accounts, which can be important for B2B products where one customer might be worth 10 smaller accounts in terms of revenue potential.

Again, I’m not saying that details aren’t valuable! Rather, I’m saying that details aren’t immediately required for identifying the right sequence of work.

You should absolutely use details to build an investment thesis for your executive team, but only once you’ve confirmed that the initiative is a top three initiative that you plan on tackling immediately. When it comes to prioritization, however, you don’t need this level of granularity.

Impact

Projected impact is typically something that takes days (if not weeks) of modeling to accurately assess. But, for the purposes of prioritization, we can quickly arrive at a sense of “how large is the impact of this initiative” by having quick, informal conversations with peer PMs, tech leads, designers, and our direct managers.

The three classifications we can use here, as well as their associated scores:

Game changer (4 points)

Significant value (2 points)

Some value (1 point)

Note that we excluded “low value” (0.5 points) and “trivial value” (0.25 points) from this assessment, since those items don’t really belong on a roadmap in the first place.

These kinds of micro-initiatives are better discussed at sprint planning meetings (where you might have 1-2 extra engineering days to knock out a small value-add), but really shouldn’t be visible for customers or executives to consider at a strategic level.

Also, note that impact should be assessed at the product org level, and not at the feature level. Your ultimate goal as a product manager is to move the entire product portfolio forward. Therefore, while many initiatives might be considered “game changers” for features, they certainly aren’t “game changers” at a product portfolio level.

For transparency, here are some of the simplifications we made by using this three-bucket scale.

Simplification #1: we’ve made no attempts at revenue forecasting or attribution modeling.

Simplification #2: we haven’t broken out impact by customer segment or customer type.

Simplification #3: we don’t attempt to differentiate between platform investments (e.g. engineering refactors and scalability) vs. user-facing investments (e.g. new functionality or UX redesigns).

Confidence

All else equal, we should ship lower-risk items before higher-risk items. That is, when two initiatives have similar ratios of benefits vs. costs, the one with less risk should go first.

Confidence and risk are two sides of the same coin. We should prioritize initiatives that are backed by both qualitative evidence and quantitative data, as we have more confidence in them (since we’ve already de-risked them with real-world learnings).

Therefore, we can use this 3-part scale:

High confidence (80%) - this bet is backed by extensive qualitative feedback and quantitative metrics

Medium confidence (50%) - this bet has either extensive qualitative feedback or extensive quantitative metrics, but not both

Low confidence (30%) - this bet has some qualitative feedback and/or quantitative metrics, but not enough that we’d make a public blog post or press release about it

Note that we’ve excluded “guesses” (10%) from this methodology, since guesses require more validation from product managers before they can be compared against other initiatives.

You’ll find that we’ve modified the percentages that McBride used for confidence - his original percentages were 100% for high confidence, 80% for medium confidence, and 50% for low confidence.

However, due to the human tendency towards overconfidence (where we believe that we’re more correct than we actually are), we’ve reduced the confidence levels for each category.
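One side effect of this adjustment is easier to see in numbers: lowering the percentages changes the relative gaps between buckets, not just the absolute scores. Here’s a quick sketch using the two sets of percentages quoted above (the variable and function names are ours).

```python
original = {"high": 1.0, "medium": 0.8, "low": 0.5}  # McBride's percentages, as quoted above
adjusted = {"high": 0.8, "medium": 0.5, "low": 0.3}  # the reduced percentages used in this guide

def high_to_low_ratio(scale: dict) -> float:
    """How many times more a high-confidence bet scores than a low-confidence one."""
    return scale["high"] / scale["low"]

print(round(high_to_low_ratio(original), 2))  # 2.0
print(round(high_to_low_ratio(adjusted), 2))  # 2.67
```

In other words, under the adjusted scale a weakly-evidenced bet is penalized more heavily relative to a well-evidenced one, all else equal.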

Here are some simplifications we’ve made here.

Simplification #1: some forms of evidence are more valuable than other forms of evidence, but we won’t consider that context for prioritization purposes. (For example, “pre-order testing” is typically very strong evidence since customers are committed to purchasing, whereas “surveys” are typically weaker evidence because customers have committed to nothing.)

Simplification #2: we’re ignoring that real-world confidence is on a spectrum rather than in buckets. Therefore, we should never use these confidence estimates when presenting to customers or executives.

Simplification #3: we don’t consider market trends, industry analysis, or competitor action.

Effort

For the purposes of prioritization, most engineering managers will have an intuitive sense of “what order of magnitude we’re talking about” for any given initiative. Therefore, we don’t need to get into debates of whether an initiative will take 3.5 dev months or 4.5 dev months.

Instead, we can use this four-part scale for effort:

Large effort, i.e. more than one dev-year (4 points)

Medium effort, i.e. more than one dev-quarter (2 points)

Small effort, i.e. 1-3 dev months (1 point)

Trivial effort, i.e. less than 1 dev month (0.5 points)

I’ve had people object that 1 dev-year should be 12 times larger than 1 dev-month, since the math suggests that to be true. However, the reason we don’t do so is that estimates have error bars, and larger estimates have larger error bars.

Many times, an estimate of 1 dev-year could be anything between 6 dev-months and 3 dev-years in reality. Many times, an estimate of 1 dev-quarter could be anything between 1 dev-month and 1 dev-year in reality.

Therefore, we’re using a basic “powers of two” scaling method for each bucket. Note that this simplification balances out our reach estimates and our impact estimates too! After all, a “game changer” could increase a metric anywhere from 10% to 200% in absolute terms, and “significant value” could increase a metric anywhere from 1% to 20% in absolute terms.
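To make the overlap in effort estimates concrete, here’s a small sketch. The ranges are the ones quoted above, expressed in dev-months; the `overlap` helper is our own illustration.

```python
from typing import Optional, Tuple

# Plausible real-world ranges (in dev-months) quoted in the text above.
one_dev_year = (6, 36)     # "6 dev-months to 3 dev-years"
one_dev_quarter = (1, 12)  # "1 dev-month to 1 dev-year"

def overlap(a: Tuple[int, int], b: Tuple[int, int]) -> Optional[Tuple[int, int]]:
    """Return the shared range of two (low, high) intervals, or None if disjoint."""
    low, high = max(a[0], b[0]), min(a[1], b[1])
    return (low, high) if low <= high else None

print(overlap(one_dev_year, one_dev_quarter))  # (6, 12)

nominal_ratio = 12 / 3  # 1 dev-year vs. 1 dev-quarter, taken at face value
bucket_ratio = 4 / 2    # "large" (4 points) vs. "medium" (2 points)
print(nominal_ratio, bucket_ratio)  # 4.0 2.0
```

Because the two ranges share everything from 6 to 12 dev-months, treating a “1 dev-year” estimate as exactly 4x a “1 dev-quarter” estimate would claim more precision than the estimates actually carry.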

There’s only one simplification we need to keep in mind with this exercise. We’re not using these estimates as project estimates for dependency evaluation or sprint planning. Rather, we’re looking at broad categories of effort, because our time horizon for prioritization is between 1 quarter and 1 year out.

So, now we know how to calculate RICE scores. Let’s pull this together into an actionable template that you can use day-to-day on the job.

A reusable template for the simplified RICE model

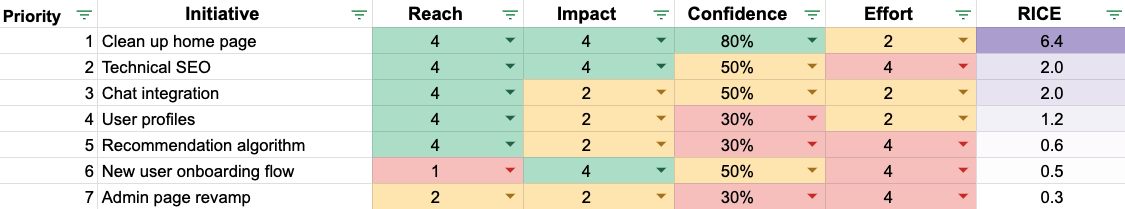

We’ve created a Google spreadsheet that you can use to fill out your own RICE models. Here’s a preview of what it looks like:

Google spreadsheet template for calculating RICE scores
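If you prefer code to spreadsheets, the same scoring logic can be sketched in Python. This is not the spreadsheet itself, just an illustration: the bucket values come from the Reach, Impact, Confidence, and Effort sections above, while the bucket keys, function name, and backlog rows are hypothetical.

```python
# Bucket values taken from the Reach, Impact, Confidence, and Effort sections above.
REACH = {"everyone": 4, "some": 2, "new": 1}
IMPACT = {"game_changer": 4, "significant": 2, "some": 1}
CONFIDENCE = {"high": 0.8, "medium": 0.5, "low": 0.3}
EFFORT = {"large": 4, "medium": 2, "small": 1, "trivial": 0.5}

def simplified_rice(reach: str, impact: str, confidence: str, effort: str) -> float:
    """Look up each bucket's value and compute R x I x C / E."""
    return REACH[reach] * IMPACT[impact] * CONFIDENCE[confidence] / EFFORT[effort]

# Hypothetical backlog rows: name -> (reach, impact, confidence, effort)
backlog = {
    "Admin audit log": ("some", "significant", "medium", "medium"),
    "Self-serve signup": ("new", "game_changer", "low", "large"),
    "Notification controls": ("everyone", "significant", "high", "small"),
}

scores = {name: simplified_rice(*row) for name, row in backlog.items()}
ranking = sorted(scores, key=scores.get, reverse=True)  # highest score first

for position, name in enumerate(ranking, start=1):
    print(position, name, round(scores[name], 2))
```

Because bucketed scores can only take a limited set of values, ties are likely in a real backlog. The formula won’t break those ties for you, so getting to a strict rank order still takes a judgment call.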

Now you’ve got a way to track your initiatives. But, as a product manager, you should always be seeking to increase return on investment, even for initiatives that you’ve already prioritized.

So, let’s talk about how to increase the impact of your work. The best way to do so is to use the 4 factors that we’ve already discussed as part of the RICE methodology.

Ways to improve RICE scores

As a product manager, you essentially have 4 levers to increase the value of your initiatives: increase reach, increase impact, increase confidence, or decrease effort.

To increase reach, look for ways to scale your impact to more users overall, but without significantly increasing effort.

For B2B products, you can use tactics like configurations (enabling different customers to have different experiences), abstraction (enabling other product teams to leverage your work), and training and enablement (empowering customer-facing teams to successfully implement your products within their customers’ organizations).

For B2C products, you can use tactics like virality (enabling products to spread from current users to new users) and partnerships (accessing your partner’s user base and allowing them to access your user base).

To increase impact, look for ways to solve a deeper customer pain than the pain they said they had. As an example, giving users the ability to “turn off all notifications” is more powerful than forcing users to have to turn off each notification one at a time.

To increase confidence, gather additional research and data to back up your product bet. Run in-product analytics to get a stronger grasp of how features interact with one another, and leverage user interviews, user feedback, and rapid prototyping to prove that your bet will solve a real pain.

To decrease effort, find ways to eliminate low-value-add work from any feature set. After all, most feature sets contain a mix of high-value work and low-value work, and the low-value work can always be punted to future iterations.

Note that when you consider how to decrease effort, try not to cut away engineering work that enables abstraction, scalability, and reusability. That’s because these efforts increase the impact of your bets. At the end of the day, you don’t want to ship functionality that no one else in your product organization can take advantage of!
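To get a feel for how much each lever moves the score, here’s a sketch that shifts a hypothetical baseline initiative by one bucket at a time, using the simplified bucket values from earlier in this guide. The baseline itself is made up.

```python
def score(reach, impact, confidence, effort):
    return reach * impact * confidence / effort

# Hypothetical baseline: some users, significant value, medium confidence, medium effort.
baseline = dict(reach=2, impact=2, confidence=0.5, effort=2)

# Each lever moved one bucket in the favorable direction.
levers = {
    "increase reach (some -> everyone)": dict(baseline, reach=4),
    "increase impact (significant -> game changer)": dict(baseline, impact=4),
    "increase confidence (medium -> high)": dict(baseline, confidence=0.8),
    "decrease effort (medium -> small)": dict(baseline, effort=1),
}

base = score(**baseline)
multipliers = {name: score(**inputs) / base for name, inputs in levers.items()}
for name, multiplier in multipliers.items():
    print(f"{name}: {multiplier:.1f}x")
```

For this baseline, a one-bucket improvement in reach, impact, or effort doubles the score, while moving from medium to high confidence multiplies it by 1.6.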

Now we understand how to maximize the impact of our work through the lens of RICE. But, let’s carefully consider the caveats that are inherent to the RICE prioritization framework.

Caveats of the RICE prioritization framework

Every framework is a simplification of messy realities, and therefore every framework comes with caveats. Let’s identify key caveats to keep in mind as we implement the RICE prioritization framework within our organizations.

RICE prioritization optimizes the tradeoff between time spent and quality of decision; it doesn’t maximize accuracy at all costs.

The time horizon for initiatives to consider using RICE prioritization is between 1 quarter and 3 years.

RICE prioritization does not replace product strategy.

RICE prioritization does not replace sprint planning.

RICE prioritization is living and breathing, and initiative ranking will change over time as you gather more information.

RICE prioritization is a guideline and not a mandate to ship initiatives in strict sequence order.

Let’s cover each of these caveats.

Caveat #1: RICE helps us balance speed vs. quality of decision. RICE scores are not immutable truths.

Once we’ve identified the top priorities, we should take the time to flesh out the details and business case for the top priorities. RICE prioritization helps the top priorities rise to the top of the list, but it’s not meant to replace any sort of financial forecasting.

If you truly need accuracy at all costs, e.g. you’re considering acquiring a competitor or you’re trying to set the price for your products, RICE is not the right tool for this scenario.

Caveat #2: RICE prioritization is valuable only when looking at medium-term sequencing in the 1 quarter to 3 year timeframe.

If you’re working on strategies that span many years or decades, the market landscape and the technological landscape will change significantly within that time. In this scenario, focus more on strategic themes and product vision, rather than using RICE prioritization to sequence initiatives.

Caveat #3: RICE prioritization is not a product strategy. Strategy defines how we will achieve a given product vision, whereas roadmapping and sequencing define how we will achieve a specified strategy.

If you’re looking to establish a product strategy, use the product strategy one-pager format instead.

Caveat #4: RICE prioritization does not replace sprint planning or sprint estimates. If you’re tackling sprint planning, work with design and engineering to get true cost estimates so that you can sequence the work appropriately without causing bottlenecks or bugs.

Caveat #5: RICE scores should be considered as living and breathing. You will need to change these scores as you gather more information over time. It’s highly probable that impact and confidence scores will change as you iteratively deliver value to customers and users.

Caveat #6: RICE scores are not hard-and-fast sequences. Sometimes, you’ll have to ship bets that don’t align with the rank order that RICE scores suggest.

That situation isn’t an “end of the world scenario” as long as you have clear justification for why this is the case. If you do ship initiatives out of order, make sure you’ve written up clear guidance for customers and executives to understand why you’re deviating from the suggested RICE sequence.

Now our eyes are open to the potential pitfalls that come with using RICE. Let’s wrap up by discussing how to customize the RICE prioritization framework for your product org.

Potential customizations for RICE prioritization

Just because we’ve proposed a more simplified version of RICE doesn’t mean that your organization can only use this one version.

After all, product managers work in context, and therefore you should treat this in-depth guide as a set of flexible frameworks rather than a prescriptive “one-size-fits-all” solution.

Here are some ways in which you can customize the RICE framework for your organization’s specific needs:

You could change the weighting for the different scales, e.g. use Fibonacci (1, 2, 3, 5, 8) instead of powers of two (0.5, 1, 2, 4, 8)

You could create different feature buckets, e.g. Adam Nash’s Three Buckets system of metric movers, customer requests, and customer delight

You could add additional granularity to each of the different RICE factors
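As a sketch of the first customization, here’s how swapping the scale could look in code. The powers-of-two values are the ones used in this guide; the way Fibonacci numbers are assigned to each bucket is an assumption we made for illustration, as are the two initiatives.

```python
# Two candidate scales. The bucket-to-value mapping for FIBONACCI is an assumption
# made for this sketch; the guide only names the sequence.
POWERS_OF_TWO = {
    "reach": {"everyone": 4, "some": 2, "new": 1},
    "impact": {"game_changer": 4, "significant": 2, "some": 1},
    "effort": {"large": 4, "medium": 2, "small": 1, "trivial": 0.5},
}
FIBONACCI = {
    "reach": {"everyone": 3, "some": 2, "new": 1},
    "impact": {"game_changer": 3, "significant": 2, "some": 1},
    "effort": {"large": 5, "medium": 3, "small": 2, "trivial": 1},
}
CONFIDENCE = {"high": 0.8, "medium": 0.5, "low": 0.3}

def score(scale, reach, impact, confidence, effort):
    """Compute R x I x C / E using the bucket values from the given scale."""
    return (scale["reach"][reach] * scale["impact"][impact]
            * CONFIDENCE[confidence] / scale["effort"][effort])

# Hypothetical initiatives: (reach, impact, confidence, effort)
x = ("everyone", "significant", "medium", "large")
y = ("some", "significant", "medium", "medium")

print(score(POWERS_OF_TWO, *x), score(POWERS_OF_TWO, *y))              # 1.0 1.0 (a tie)
print(round(score(FIBONACCI, *x), 2), round(score(FIBONACCI, *y), 2))  # 0.6 0.67
```

The two initiatives tie under powers of two but separate under this Fibonacci mapping. Since the choice of scale can change the ordering, whichever scale you pick should be applied the same way by every team.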

From a “defaults” perspective, we don’t usually recommend bucketing since that triggers a potential apples-to-oranges evaluation problem. You’ll run into challenges identifying how to trade off initiatives across categories.

Bucketing only works if you have a clearly-defined “point allocation mix” for each bucket, e.g. in Q2 you’ve allocated 40% of bandwidth into metric movers, 20% of bandwidth into customer requests, and 40% of bandwidth into customer delighters.

We also don’t recommend adding more than 5 categories to any axis. Too many categories cause unnecessary complexity that doesn’t provide additional signal when it comes to the right sequence of work.

As a last word of advice: while you should feel free to customize the RICE model for your organization’s existing decision-making processes, make sure that whatever model you use is consistent across all product managers within your organization.

If standardized prioritization is something that your product organization would find helpful to discuss and implement, reach out to us to schedule a workshop.

Closing thoughts

Prioritization is an essential responsibility for any product manager. By deeply understanding how to prioritize effectively and how to communicate this prioritization methodology to others, you’ll reduce friction, get buy-in faster, and ship more impactful initiatives over time.

Thank you to Pauli Bielewicz, Siamak Khorrami, Goutham Budati, Markus Seebauer, Juliet Chuang, and Kendra Ritterhern for making this guide possible.